The problem statement is as follows: build a microservices system that supports a high number of concurrent users and can read basic data from each user’s purchase package.

The purchase information will be sent to Kafka, allowing not only the payment service but also other services to reuse it.

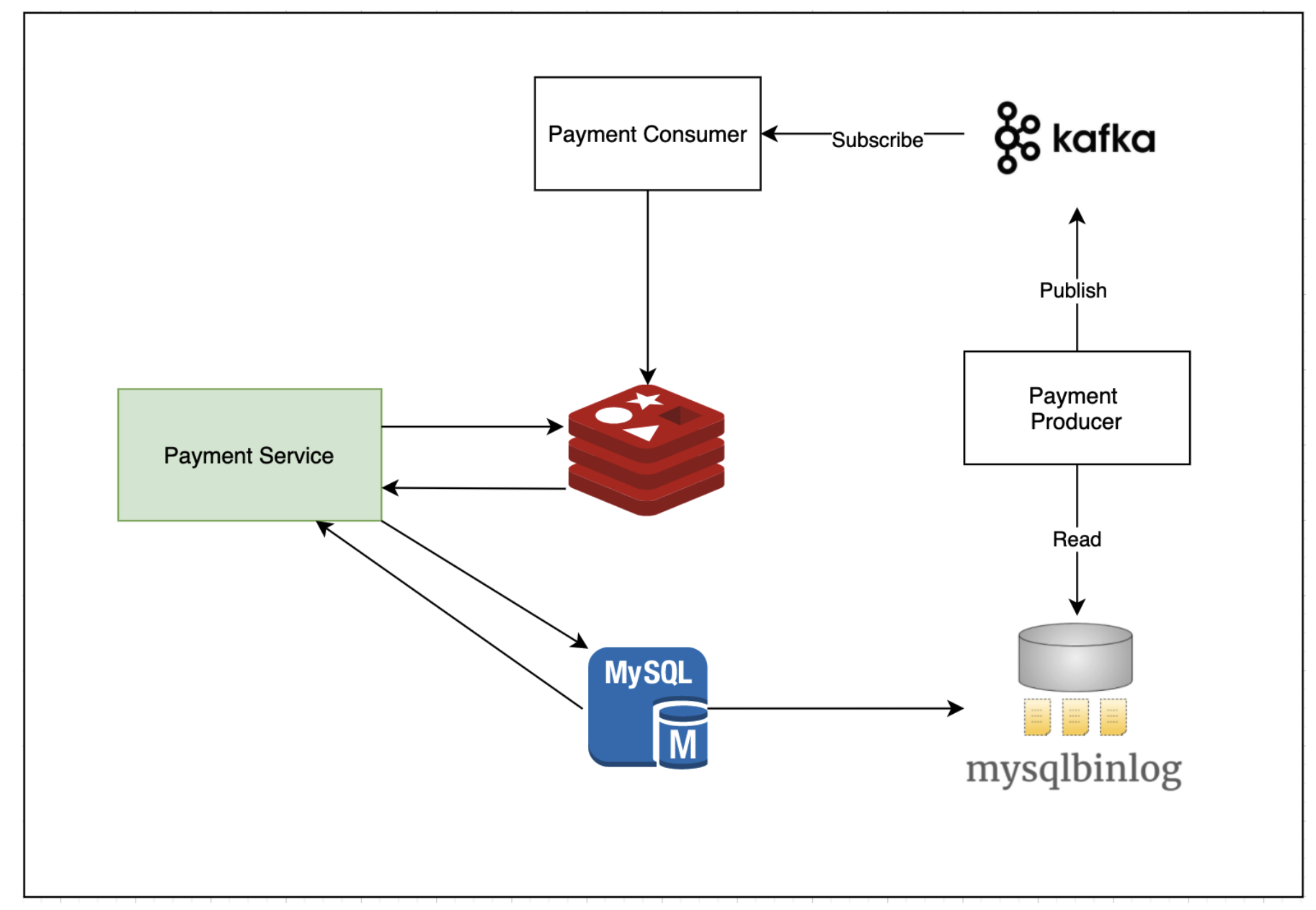

Components Overview:

Payment Service

The Payment Service is the core component responsible for handling payment operations. It interacts with both Redis and MySQL to ensure efficient data management.

Diagram

The following diagram visualizes the architecture and data flow:

Redis

Redis is an in-memory data store, used here for caching to enhance the speed of data retrieval. Cached data enables the Payment Service to access information faster without querying the database directly.
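One common way to implement this read path is the cache-aside pattern: check Redis first and fall back to MySQL only on a miss. The sketch below assumes a redis-py style client (`get(key)` and `set(key, value, ex=ttl)`). The key scheme `purchases:<id>`, the one-hour TTL, and the `load_from_db` callback (standing in for a MySQL query) are hypothetical.

```python
import json
from typing import Any, Callable, Dict, Optional


def get_purchase(cache,
                 load_from_db: Callable[[int], Optional[Dict[str, Any]]],
                 purchase_id: int,
                 ttl: int = 3600) -> Optional[Dict[str, Any]]:
    """Return purchase data, preferring Redis and falling back to MySQL."""
    key = f"purchases:{purchase_id}"  # hypothetical key scheme
    cached = cache.get(key)
    if cached is not None:
        # Cache hit: no database round trip (json.loads accepts bytes or str).
        return json.loads(cached)
    # Cache miss: query the database through the supplied callback.
    row = load_from_db(purchase_id)
    if row is not None:
        # Populate the cache so the next read is served from memory.
        cache.set(key, json.dumps(row, default=str), ex=ttl)
    return row
```

Passing the client and the loader in as arguments keeps the function independent of any particular Redis or MySQL driver.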

MySQL

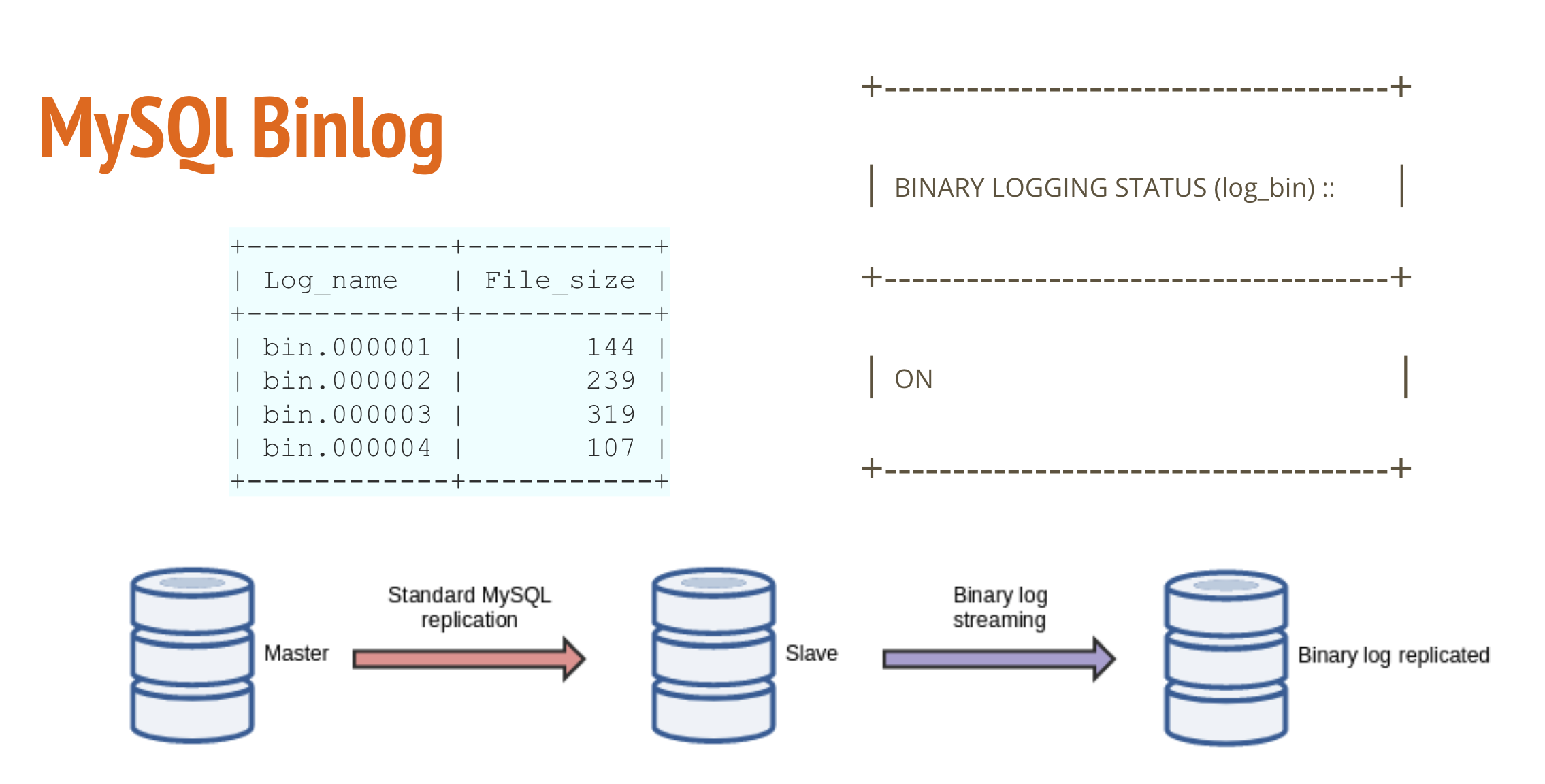

MySQL serves as the primary database for persistent storage of payment data. In this system, the MySQL binary log (binlog) captures every change to the database, and these changes are passed on to downstream services for near-real-time processing.

Kafka

Kafka is a distributed event streaming platform. The Payment Producer reads changes from the MySQL binlog and publishes them to Kafka topics. The Payment Consumer then subscribes to these topics, processes the incoming data, and writes it to Redis for caching.

Payment Producer

The Payment Producer reads real-time updates from the MySQL binlog and publishes the relevant data to a Kafka topic, allowing the Payment Consumer to process the changes in a scalable way.
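A minimal sketch of the publishing step, assuming change records shaped like `{"table": ..., "op": ..., "before": ..., "after": ...}` and a primary-key column named `id` (both hypothetical). `publish_change` only needs an object with a kafka-python style `send(topic, key=..., value=...)` method, so it can be exercised without a running broker.

```python
import json
from typing import Any, Dict, Tuple


def encode_change(change: Dict[str, Any]) -> Tuple[bytes, bytes]:
    """Serialize a change record into Kafka (key, value) bytes."""
    # Deletes have no "after" image, so fall back to "before" for the key.
    row = change["after"] if change["after"] is not None else change["before"]
    # Keying by table and primary key sends every change to the same row
    # to the same partition, so consumers see those changes in order.
    key = f'{change["table"]}:{row["id"]}'.encode()
    # default=str handles values such as datetime or Decimal from MySQL rows.
    value = json.dumps(change, default=str, sort_keys=True).encode()
    return key, value


def publish_change(producer, topic: str, change: Dict[str, Any]) -> None:
    """Publish one change using a producer with send(topic, key=, value=)."""
    key, value = encode_change(change)
    producer.send(topic, key=key, value=value)
```

With kafka-python, `producer` could be `KafkaProducer(bootstrap_servers="localhost:9092")`; `send` is asynchronous, so calling `producer.flush()` before shutdown makes sure buffered messages are delivered.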

Payment Consumer

The Payment Consumer subscribes to the Kafka topic and processes the published data. After processing, it pushes the data into Redis, ensuring that the Payment Service has quick access to the most recent payment information.
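The consumer side can be sketched as a function that applies one Kafka message to the cache. It assumes the same hypothetical JSON change format and `id` column as above, plus a redis-py style client (`set(key, value, ex=ttl)` and `delete(key)`); the key scheme and TTL are illustrative choices, not part of the original design.

```python
import json
from typing import Any, Dict

CACHE_TTL_SECONDS = 3600  # hypothetical TTL; tune to acceptable staleness


def cache_key(table: str, row_id: Any) -> str:
    # Hypothetical key scheme, e.g. "purchases:42".
    return f"{table}:{row_id}"


def handle_message(cache, raw_value: bytes) -> str:
    """Apply one change message to the cache and return the key touched."""
    change: Dict[str, Any] = json.loads(raw_value)
    if change["op"] == "delete":
        key = cache_key(change["table"], change["before"]["id"])
        # Remove the entry so readers no longer see the deleted row.
        cache.delete(key)
    else:
        # Inserts and updates both overwrite the entry with the newest row image.
        key = cache_key(change["table"], change["after"]["id"])
        cache.set(key, json.dumps(change["after"]), ex=CACHE_TTL_SECONDS)
    return key
```

With kafka-python and redis-py, a loop such as `for msg in KafkaConsumer("payment-changes", bootstrap_servers="localhost:9092", group_id="payment-cache"): handle_message(r, msg.value)`, where `r = redis.Redis()` is created once, would drive this function. Other services can read the same topic independently by using their own `group_id`.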

Workflow

- MySQL records payment transactions.

- The MySQL binlog records each change, and the Payment Producer reads these changes from it.

- The Payment Producer publishes the changes to a Kafka topic.

- The Payment Consumer subscribes to the Kafka topic, processes the updates, and caches them in Redis.

- The Payment Service accesses the cached data from Redis and interacts with MySQL for additional operations.

This architecture leverages Kafka for event-driven communication, Redis for fast data retrieval, and MySQL for reliable data storage.

Using MySQL Binlog

Here is a Python example of how to read MySQL binlogs using BinLogStreamReader:

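A minimal sketch of such a reader, assuming the `python-mysql-replication` package (imported as `pymysqlreplication`, which provides `BinLogStreamReader`), a MySQL server running with `binlog_format=ROW`, and a user with replication privileges. The helper names `to_change` and `stream_changes`, the default `server_id`, and the output record layout are illustrative assumptions.

```python
from typing import Any, Dict, Iterator, List, Optional


def to_change(schema: str, table: str, op: str, row: Dict[str, Any]) -> Dict[str, Any]:
    """Normalize one binlog row into a plain dict (hypothetical layout)."""
    if op == "insert":
        before, after = None, row["values"]
    elif op == "update":
        before, after = row["before_values"], row["after_values"]
    elif op == "delete":
        before, after = row["values"], None
    else:
        raise ValueError(f"unsupported op: {op}")
    return {"schema": schema, "table": table, "op": op,
            "before": before, "after": after}


def stream_changes(host: str, port: int, user: str, password: str,
                   server_id: int = 100,
                   tables: Optional[List[str]] = None) -> Iterator[Dict[str, Any]]:
    """Yield row changes from the MySQL binlog as they are written."""
    # Imported here so to_change() can be used without the package installed.
    from pymysqlreplication import BinLogStreamReader
    from pymysqlreplication.row_event import (
        DeleteRowsEvent, UpdateRowsEvent, WriteRowsEvent)

    ops = {WriteRowsEvent: "insert", UpdateRowsEvent: "update",
           DeleteRowsEvent: "delete"}
    stream = BinLogStreamReader(
        connection_settings={"host": host, "port": port,
                             "user": user, "passwd": password},
        server_id=server_id,    # must be unique among the server's replicas
        only_events=list(ops),  # only row-level insert/update/delete events
        only_tables=tables,     # None means all tables
        blocking=True,          # wait for new events instead of stopping
        resume_stream=True,     # start from the current position (new changes only)
    )
    try:
        for event in stream:
            op = ops.get(type(event))
            if op is None:
                continue
            # One event can carry several rows (e.g. a multi-row INSERT).
            for row in event.rows:
                yield to_change(event.schema, event.table, op, row)
    finally:
        stream.close()
```

For example, `for change in stream_changes("127.0.0.1", 3306, "repl", "secret", tables=["purchases"]): print(change)` (hypothetical credentials and table) would print one dict per inserted, updated, or deleted row, which the Payment Producer can then publish to Kafka.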

Upcoming articles

In the upcoming articles, I will explore Redis and Kafka in greater depth, focusing on essential commands and practical applications.

How to Use Redis: A detailed guide on using Redis for caching and real-time data management.

- Data structures include strings, lists, hashes, and more

- Redis benchmark results

- Tools and frameworks such as Twemproxy and Redis Sentinel

How to Use Kafka: An in-depth article on using Apache Kafka for distributed messaging and stream processing.

Stay tuned for more!